Developing a Sign Language Translator Using XR Hand Tracking


This topic has 5 replies, 2 voices, and was last updated 6 months, 2 weeks ago by robert.malzan.

Hi,

We’re developing a solution for people who use sign language: essentially a translator for those who don’t know it. To communicate in sign language, someone needs to use their hands, but we couldn’t find a hand tracking option in the project (it seems that only controller interactions are active).

Currently, we’re able to do this via Unity’s XR Hands package, so it would be nice to use a similar library or any alternative you can provide. We need the x, y, and z data of the hand’s key points for our product.

This topic was modified 8 months, 2 weeks ago by Sametk13.


March 14, 2025 at 4:44 pm #1321

We are currently evaluating when we can build this into the system. There is no fixed date, but we definitely want to support hands.

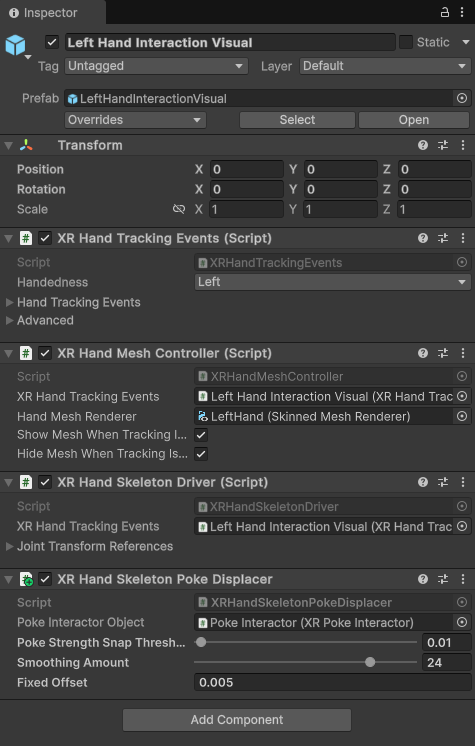

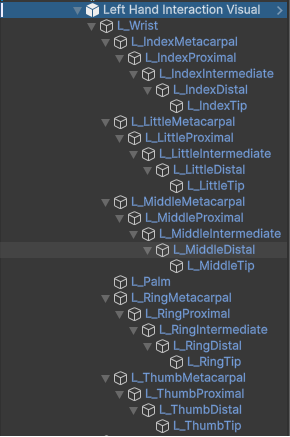

April 11, 2025 at 11:51 am #1455

Hand tracking is now implemented in Release 0.2.2. You can download it now. The setup is as follows:

In the hierarchy, you’ll find

Hope this helps!

Bob

Thanks for the quick update.

Could you please guide us on how to access and use the hand tracking data, especially the x, y, z coordinates of key hand points (such as the wrist, fingers, etc.)? We are using this data to build a sign language translator, and it’s important for us to interpret hand gestures accurately.

We look forward to your support!

May 13, 2025 at 6:25 am #1539

Hello Sametk13,

Could you be a bit more specific about how you would implement this algorithm in a Unity environment? I already pointed you to the hand hierarchy and the XR Hand Skeleton Driver. What I need to know is how exactly you would recognize a gesture if you had all the position and orientation (rotation) information of both hand skeletons.

The `XRHandSkeletonDriver` actually has a public `List<JointToTransformReference> jointTransformReferences;` which you can use to read all the information you may need. In your code you need `using UnityEngine.XR.Hands` to have access to the `XRHandSkeletonDriver`.

Currently, I am assuming you’ll create a specific Node which reads both hands and resolves the changes to the hands and fingers into a gesture, which you can then output as text and display on a screen. The Node script is added to the World Builder, which will compile it at runtime. The same Node script will also run on the Portal Hopper’s side to actively interpret and display the detected hand signals. Correct?
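For reference, reading the joint data described above might look roughly like the sketch below. It is a plain Unity `MonoBehaviour`, not a Portals United Node; how this maps onto a Node script is not covered here. The class name `HandJointReader`, the `skeletonDriver` field, and the `TryGetJointPosition` helper are hypothetical names chosen for illustration. The sketch assumes one `XRHandSkeletonDriver` per hand is assigned in the Inspector and that each `JointToTransformReference` exposes `xrHandJointID` and `jointTransform`, as in current versions of the XR Hands package.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR.Hands;

// Hypothetical helper for illustration; not part of Portals United or XR Hands.
public class HandJointReader : MonoBehaviour
{
    // Assumption: the XRHandSkeletonDriver of one hand is assigned in the Inspector.
    [SerializeField] XRHandSkeletonDriver skeletonDriver;

    // Latest world-space position of each joint, keyed by joint ID.
    readonly Dictionary<XRHandJointID, Vector3> jointPositions =
        new Dictionary<XRHandJointID, Vector3>();

    void Update()
    {
        if (skeletonDriver == null)
            return;

        List<JointToTransformReference> references = skeletonDriver.jointTransformReferences;
        if (references == null)
            return;

        jointPositions.Clear();
        foreach (JointToTransformReference reference in references)
        {
            Transform joint = reference.jointTransform;
            if (joint == null)
                continue; // Joint not mapped to a transform in this hierarchy.

            // Transform.position is in world space; use localPosition
            // instead for coordinates relative to the parent joint.
            jointPositions[reference.xrHandJointID] = joint.position;
        }
    }

    // Returns true and the x, y, z position if the joint was seen this frame.
    public bool TryGetJointPosition(XRHandJointID id, out Vector3 position)
    {
        return jointPositions.TryGetValue(id, out position);
    }
}
```

A gesture recognizer could then call, for example, `TryGetJointPosition(XRHandJointID.IndexTip, out var tip)` on the reader of each hand and compare the resulting positions against its own gesture definitions.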
