robert.malzan

Forum Replies Created


June 6, 2025 at 1:40 pm #1678

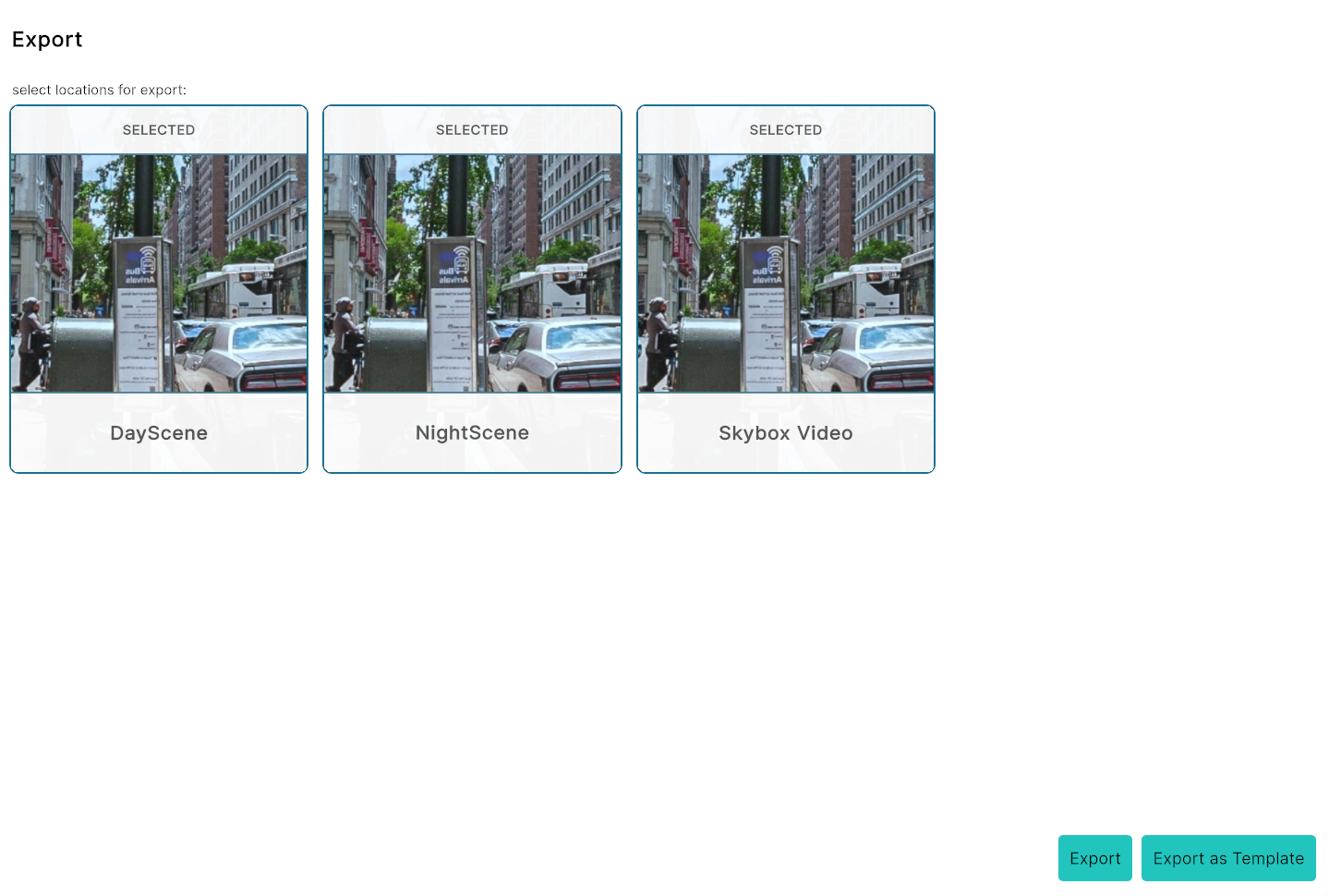

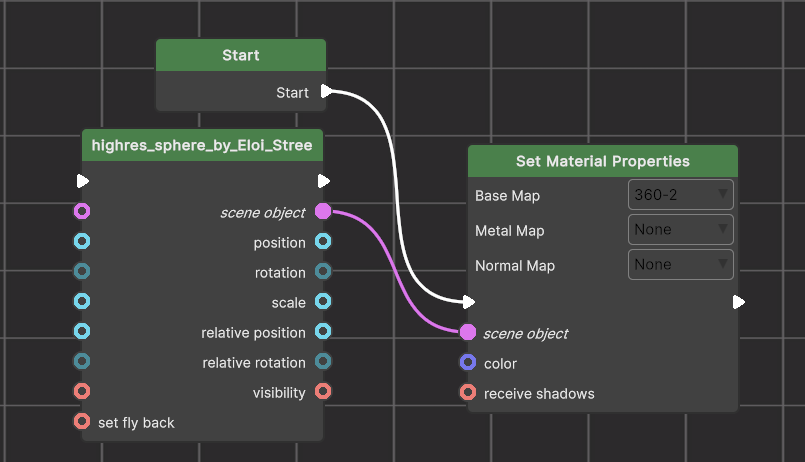

The skybox bug should be fixed. But we also have a dynamic “skybox” feature for 360° panoramic images. You can load the panoramic image as a texture and drop it onto a sphere (we now have a sphere as a standard 3D model), or dynamically assign the texture through the Set Material Properties Node:

Just swap the Base Map.

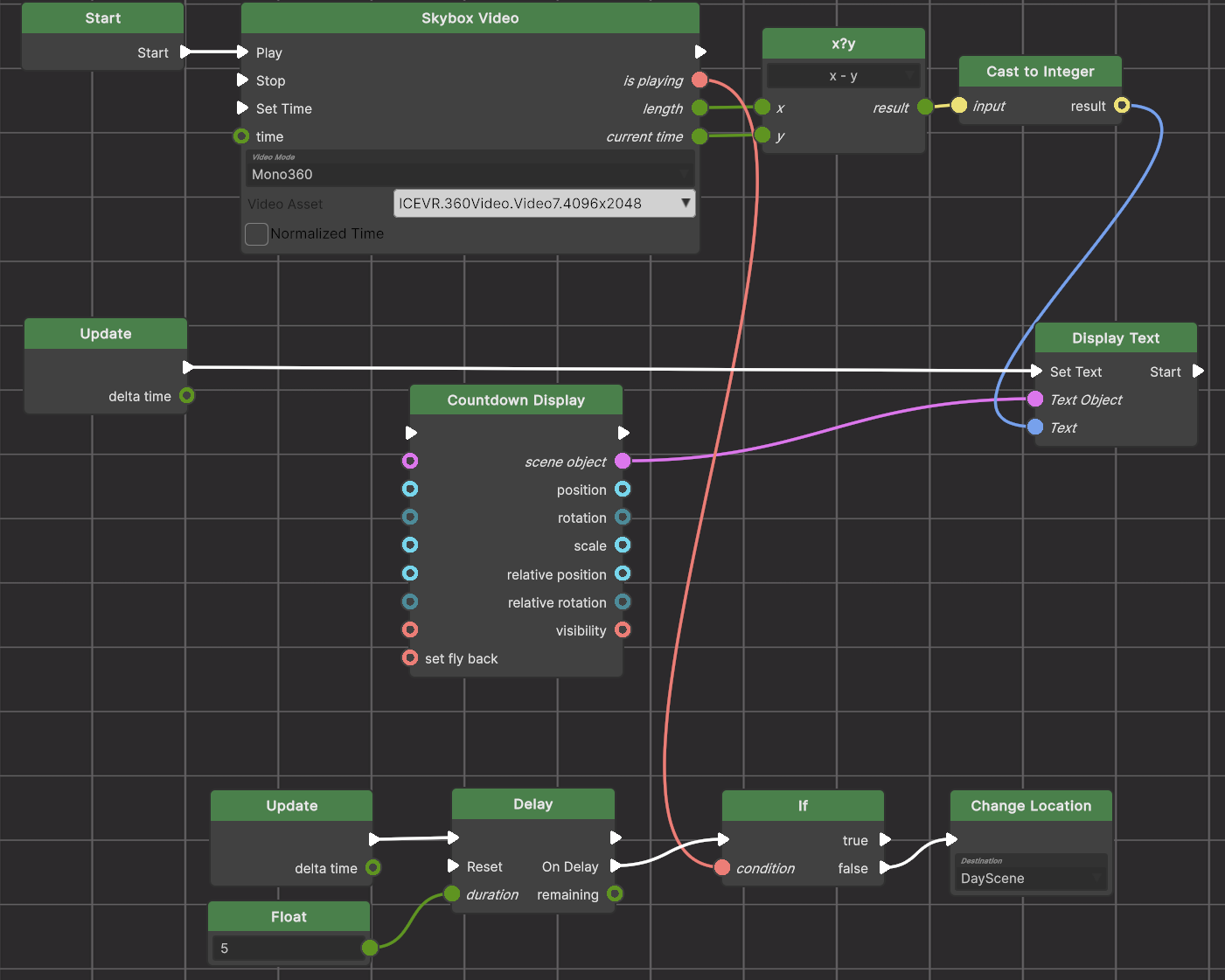

Now you can also have a 360° video as a skybox:

This graph plays a monoscopic 360° video in the skybox. It displays a countdown and changes location once the video has finished playing.

The Delay Node makes sure that the graph doesn’t immediately go to the next location. The player needs a moment before it reports a “playing” status, so we check that status for the first time after 5 seconds.
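
To show why the first status check is delayed, here is a minimal Python simulation. It is only a sketch: `FakePlayer`, its 2-second start-up time, the 30-second video length and the 1-second polling interval are made-up assumptions, not XR4ED nodes or settings. With the 5-second delay, the location change happens only after the video has finished. With no delay, the very first check sees a player that is not yet “playing”, and the graph moves on immediately.

```python
# Hypothetical simulation of "Delay, then poll the player status".
# FakePlayer and all timings are illustrative assumptions, not XR4ED APIs.
from dataclasses import dataclass
from typing import Optional


@dataclass
class FakePlayer:
    startup_s: float = 2.0    # assumed time before the status becomes "playing"
    duration_s: float = 30.0  # assumed length of the 360° video
    started_at: Optional[float] = None

    def play(self, now: float) -> None:
        self.started_at = now

    def status(self, now: float) -> str:
        if self.started_at is None:
            return "stopped"
        elapsed = now - self.started_at
        if elapsed < self.startup_s:
            return "preparing"  # the player is not "playing" yet
        if elapsed < self.startup_s + self.duration_s:
            return "playing"
        return "finished"


def time_of_location_change(player: FakePlayer, first_check_s: float = 5.0,
                            poll_s: float = 1.0) -> float:
    """Start the video, wait first_check_s (the Delay Node), then poll until
    the player is no longer "playing"; return the time of the location change."""
    now = 0.0
    player.play(now)
    now += first_check_s
    while player.status(now) == "playing":
        now += poll_s
    return now


print(time_of_location_change(FakePlayer()))                     # 32.0
print(time_of_location_change(FakePlayer(), first_check_s=0.0))  # 0.0
```

In this model the delay only helps if it is longer than the player’s start-up time; with `FakePlayer(startup_s=6.0)` the 5-second check would also fire too early.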

June 5, 2025 at 9:58 am #1664

Only the first one, to avoid confusion about which VRML link should be clicked. But you must do this manually: if you use the XR4ED plugin to publish, the links will all be wrong. Use custom publishing with the root domain you are using, then upload only the first VRML file plus a picture and the descriptions.

June 4, 2025 at 4:36 pm #1661

Yes, I have it on my ToDo list now, but we have a huge backlog and I doubt you’ll get this functionality in time.

June 4, 2025 at 4:34 pm #1660

I tried using multiple flow outputs the way you are trying to use them and also got errors. It seems that at runtime you (currently?) cannot call `RunFlowOutput()`. However, if the flow output has a valid reference (`HasValidReference(m_FlowOutput1)` is true if that output is connected to something), you might be able to call `theNextNodesInput = FindInput(m_FlowOutput1.InputReference)`.

Then you “manually” call the input’s Run action, as in `theNextNodesInput.Run();`. I think this should work.
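
The workaround can be modelled in a few lines of Python. This is a hypothetical mock, not the XR4ED API: `FlowOutput`, `Input`, `Graph`, `find_input` and `has_valid_reference` are illustrative stand-ins loosely named after `FindInput`, `HasValidReference` and `Run()` from the post. It only shows the pattern: skip unconnected outputs, look up the connected input, and call its run action directly.

```python
# Hypothetical mock of the "manual flow output" workaround.
# None of these classes are real XR4ED types; they only mirror the idea.
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class FlowOutput:
    input_reference: Optional[str] = None  # id of the connected input, if any


@dataclass
class Input:
    name: str
    action: Callable[[], None]

    def run(self) -> None:  # stand-in for theNextNodesInput.Run()
        self.action()


class Graph:
    def __init__(self) -> None:
        self.inputs: Dict[str, Input] = {}

    def find_input(self, ref: str) -> Optional[Input]:  # stand-in for FindInput()
        return self.inputs.get(ref)


def has_valid_reference(output: FlowOutput) -> bool:  # stand-in for HasValidReference()
    return output.input_reference is not None


def fire_flow_outputs(graph: Graph, outputs: List[FlowOutput]) -> None:
    """Run every connected output by calling the next node's input directly."""
    for output in outputs:
        if not has_valid_reference(output):
            continue  # this output is not connected to anything
        next_input = graph.find_input(output.input_reference)
        if next_input is not None:
            next_input.run()


log: List[str] = []
graph = Graph()
graph.inputs["a"] = Input("a", lambda: log.append("a"))
graph.inputs["b"] = Input("b", lambda: log.append("b"))
fire_flow_outputs(graph, [FlowOutput("a"), FlowOutput(), FlowOutput("b")])
print(log)  # ['a', 'b']
```

The unconnected middle output is skipped, so only the two connected inputs run, in the order of the outputs.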

June 4, 2025 at 3:58 pm #1657

Use Change Location. Open Link will attempt to start the Hopper, because the file ends in .vrml and Open Link automatically starts the app that matches the file type: a .txt file opens the text editor and a .ppt file launches PowerPoint. But the Hopper is already running, so Open Link will fail.

Let’s assume that you have a server/domain named MyDomain.com and on that server a public folder named xr4ed. Place the other 3 vrml files there. Let’s say they are named vrml2.vrml, vrml3.vrml, and vrml4.vrml.

The nodes that switch to those locations would then call the external link http://MyDomain.com/xr4ed/vrml2.vrml for the second vrml file, and so on.

You could even place a duplicate of vrml1.vrml there to create a “round trip” that goes back to the first location after the last one.

If you did that, you could even publish the link in this forum (or in a PDF, PPT, etc.) as an entry point to the Hopper. Just prefix the link with `hopper:`. The address of the entry-point vrml (first location) would then be published as hopper:http://MyDomain.com/xr4ed/vrml1.vrml. Share it with your friends (who have the Hopper installed)!

You can test it here
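
The naming scheme above can be expressed as a small Python helper. It is a sketch: `MyDomain.com`, the `xr4ed` folder and the files vrml1.vrml to vrml4.vrml are the example values from this post, while `location_url`, `hopper_link` and `round_trip` are hypothetical helper names, not part of XR4ED. `round_trip` pairs each location with the next one and sends the last location back to the duplicate vrml1.vrml on the server.

```python
# Hypothetical helpers for the URL scheme described in the post.
from typing import List, Tuple

BASE_URL = "http://MyDomain.com/xr4ed"  # example server and public folder


def location_url(n: int) -> str:
    """URL of the n-th location file, e.g. vrml2.vrml for n = 2."""
    return f"{BASE_URL}/vrml{n}.vrml"


def hopper_link(url: str) -> str:
    """Entry-point link that opens the given vrml file in the Hopper."""
    return "hopper:" + url


def round_trip(count: int) -> List[Tuple[str, str]]:
    """(current, next) pairs; the last location links back to vrml1.vrml."""
    urls = [location_url(n) for n in range(1, count + 1)]
    return [(urls[i], urls[(i + 1) % count]) for i in range(count)]


print(hopper_link(location_url(1)))
# hopper:http://MyDomain.com/xr4ed/vrml1.vrml
for current, nxt in round_trip(4):
    print(current, "->", nxt)
```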


June 4, 2025 at 9:39 am #1654

Actually, it’s not going to work in the shop. Do you have access to a server where you can store some files with public read access? If so, you can use the nodes for switching between locations as expected. In the shop, however, you would have to know the download URL of each vrml file within the shop. Those names are hidden, and unraveling the hidden references gets too complex. Uploading the project to the shop would present the user with one link for each vrml file, so you could theoretically jump directly to any one of those locations by clicking its vrml file link.

Instead, upload your project files to some other location and upload only the vrml file that points to the first location to the shop. That vrml file, of course, contains a link to the data on your own (or hosted) server.

May 27, 2025 at 5:10 pm #1635

Cool 🙂 (I always have to have the last word because that’s how I know that all questions have been answered. There is no way to ‘close’ a thread here…)

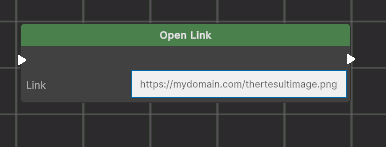

May 27, 2025 at 5:08 pm #1634

Tomorrow we will publish a new release with a new node type called Open Link.

I think this may be what you are looking for.

May 27, 2025 at 5:03 pm #1633

Actually, the second method, `RunFlowOutput(m_FlowOutput1)`, should work…

What are your error messages?

However, why not create a task node with two (three, in your example) tasks? At the end of a task you just call “Next Task” and get almost the same result, except that the second flow is executed one frame later.
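
The timing difference can be sketched in Python. This is a simplified model under stated assumptions, not XR4ED code: it treats several flow outputs on one node as firing in the same frame and assumes each “Next Task” call starts the following task one frame later, so with three tasks the last one runs two frames after the first. `run_fan_out` and `run_task_node` are hypothetical names.

```python
# Simplified frame model; function names are hypothetical, not XR4ED nodes.
from typing import Callable, List, Tuple

Flow = Callable[[], str]


def run_fan_out(flows: List[Flow], frame: int = 0) -> List[Tuple[int, str]]:
    """Several flow outputs on one node: every flow runs in the same frame."""
    return [(frame, flow()) for flow in flows]


def run_task_node(tasks: List[Flow], frame: int = 0) -> List[Tuple[int, str]]:
    """Task node: "Next Task" at the end of a task starts the next one a frame later."""
    log = []
    for task in tasks:
        log.append((frame, task()))
        frame += 1  # assumed one-frame hop per "Next Task"
    return log


flows = [lambda: "A", lambda: "B", lambda: "C"]
print(run_fan_out(flows))    # [(0, 'A'), (0, 'B'), (0, 'C')]
print(run_task_node(flows))  # [(0, 'A'), (1, 'B'), (2, 'C')]
```

The order of execution is the same in both cases; only the frame in which each flow runs differs.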
